Argonne’s Aurora supercomputer to help scientists advance dark matter research

New exascale supercomputer will enable large-scale simulations and machine learning techniques to shed light on dark matter.

The U.S. Department of Energy’s (DOE) Argonne National Laboratory is building one of the nation’s first exascale systems, Aurora. To prepare codes for the architecture and scale of the new supercomputer, 15 research teams are taking part in the Aurora Early Science Program (ESP) through the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science user facility. With access to pre-production time on the system, these researchers will be among the first in the world to use Aurora for science.

There is more than five times as much dark matter as visible matter in the universe. Despite its crucial role in forming galaxies and shaping the cosmos, scientists still have little idea about the nature of this mysterious substance.

The “dark” in dark matter simply refers to the fact that it does not reflect or emit light, making it extremely challenging to detect. But it’s not just emptiness out there; all that darkness has mass, and that mass keeps planets and stars properly in their orbits through space.

“Dark matter is really one of the biggest puzzles in physics at the moment,” said William Detmold, a professor of physics at the Massachusetts Institute of Technology (MIT).

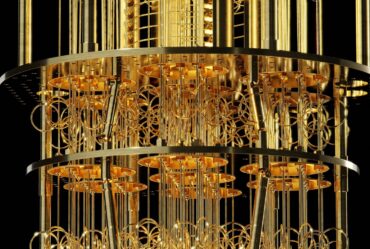

Researchers across the planet are toiling away in laboratories above ground and below, in space and underwater, trying to find just the right testing environments and materials to identify dark matter. Meanwhile, Detmold and fellow MIT professor Phiala Shanahan are preparing to use Argonne’s Aurora exascale supercomputer and artificial intelligence (AI) to perform massive simulations that provide accurate predictions of, and insights into, the behavior of the particles and forces involved in those experiments. Exascale refers to supercomputers capable of performing at least one exaflop, or a billion billion (10^18) calculations per second. Built in collaboration with Intel and Hewlett Packard Enterprise, Aurora will be one of the world’s fastest supercomputers for open science.

To enable the simulations, Detmold and Shanahan’s Aurora ESP project is helping bring a well-established physics framework known as Lattice QCD (quantum chromodynamics), which has a long history in high-performance computing (HPC), into the exascale era. Because dark matter cannot presently be observed directly, their work calculates the responses expected in laboratory experiments, using exascale computing power and AI to dramatically speed up the process.

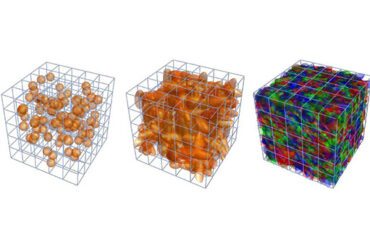

Specifically, the team is working to use machine learning to enhance the efficiency of sampling gluon field configurations within the mathematical framework of Lattice QCD. This capability will help improve the speed and accuracy of calculations to explore particle interactions and fundamental physics phenomena, providing insights relevant to the study of dark matter.
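To give a sense of what "sampling field configurations" means, here is a minimal sketch of a conventional Metropolis sampler, the kind of baseline method that machine learning aims to make more efficient. It is not the team's code or method. It assumes a toy two-dimensional U(1) gauge theory on a small periodic lattice instead of the SU(3) gluon fields of full Lattice QCD, and every name and parameter in it (`run_toy_sampler`, `beta`, `step`, the lattice size) is hypothetical and chosen only for illustration.

```python
import math
import random


def plaquette_angle(theta, x, y, size):
    """Oriented sum of the four link angles around the unit square at (x, y)."""
    xp, yp = (x + 1) % size, (y + 1) % size  # periodic boundaries
    return theta[0][x][y] + theta[1][xp][y] - theta[0][x][yp] - theta[1][x][y]


def local_action(theta, mu, x, y, size, beta):
    """Action of the two plaquettes that contain the link (mu, x, y)."""
    if mu == 0:
        corners = [(x, y), (x, (y - 1) % size)]
    else:
        corners = [(x, y), ((x - 1) % size, y)]
    return -beta * sum(
        math.cos(plaquette_angle(theta, cx, cy, size)) for cx, cy in corners
    )


def metropolis_sweep(theta, size, beta, step, rng):
    """Propose one random change per link; return the fraction accepted."""
    accepted = 0
    for mu in range(2):
        for x in range(size):
            for y in range(size):
                old_angle = theta[mu][x][y]
                s_old = local_action(theta, mu, x, y, size, beta)
                theta[mu][x][y] = old_angle + rng.uniform(-step, step)
                delta = local_action(theta, mu, x, y, size, beta) - s_old
                # Accept with probability min(1, exp(-delta)).
                if delta <= 0 or rng.random() < math.exp(-delta):
                    accepted += 1
                else:
                    theta[mu][x][y] = old_angle  # reject: restore the link
    return accepted / (2 * size * size)


def mean_plaquette(theta, size):
    """Average of cos(plaquette angle) over the whole lattice."""
    total = sum(
        math.cos(plaquette_angle(theta, x, y, size))
        for x in range(size)
        for y in range(size)
    )
    return total / (size * size)


def run_toy_sampler(size=8, beta=2.0, sweeps=300, burn_in=100, step=1.0, seed=0):
    """Sample toy field configurations and average an observable over them."""
    rng = random.Random(seed)
    # theta[mu][x][y] is the angle on the link leaving site (x, y) in direction mu.
    theta = [[[0.0] * size for _ in range(size)] for _ in range(2)]
    measurements = []
    for sweep in range(sweeps):
        metropolis_sweep(theta, size, beta, step, rng)
        if sweep >= burn_in:  # discard early, unequilibrated configurations
            measurements.append(mean_plaquette(theta, size))
    return sum(measurements) / len(measurements)


if __name__ == "__main__":
    print(run_toy_sampler())
```

Each sweep here changes the field only slightly, so successive configurations are strongly correlated. That cost grows quickly for realistic four-dimensional lattices, which is the kind of sampling inefficiency that motivates the search for better methods.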

Detecting dark matter

Dark matter is matter that has not been directly detected and is separate from the protons, electrons, neutrons and other particles described by what scientists call the Standard Model of physics. In the last 70 years, particles even smaller than protons and neutrons, such as quarks, have been discovered, but dark matter is something different altogether.

“When we talk about the Standard Model, we focus on things that are at a very small scale — smaller than the atom basically,” Detmold said. “Our team’s research is based on the theory of quantum chromodynamics, which explains the way quarks interact with one another inside the nucleus of an atom. We use Lattice QCD simulations to try to understand how those subatomic reactions work and to determine the potential interactions with dark matter.”

Testing for dark matter is extremely challenging. The difficulty lies not only in finding it but also in removing all the background interactions with ordinary matter to see what is left. Extraneous matter is not the only problem; extraneous radiation is one, too. That’s why some experimentalists are trying to create ultra-precise detectors deep underground, shielded from all forms of radiation, in settings such as the James Bond-like laboratory at the Sanford Underground Research Facility. But computational physicists are engaged in a different task.

“A detector will basically just count and hopefully see some number of dark matter interactions, but it won’t tell you about those interactions,” Detmold said. “So, what we’re really aiming for here, is after these experiments have made some detections, what can we then infer about the actual nature of dark matter.”

Cracking the code for exascale

Lattice QCD is not a new concept; some version of quantum chromodynamics has been around since the discovery of the quark. Lattice QCD codes have run on powerful supercomputers for many years, driven in part by the U.S. Lattice Quantum Chromodynamics (USQCD) Collaboration, a large partnership founded in 1999 to create and use Lattice QCD software on large-scale computers.

Detmold and Shanahan, both active members of the USQCD Collaboration, have spent years preparing for the exascale era on other supercomputers around the world, including the ALCF’s Theta system. Lattice QCD research is computationally intensive, and exascale computing power promises to alleviate bottlenecks and other hardware limitations.

Much of the work on these codes has been done at MIT, with assistance from former Argonne postdoctoral researcher Denis Boyda. Modeling and testing have also taken place on the ALCF’s Sunspot test and development system, which is outfitted with the same hardware as Aurora. One of Aurora’s advantages as a next-generation supercomputer is its powerful Intel graphics processing units, which are particularly useful for workloads involving AI, machine learning and big data.

“Aurora will enable us to scale up and deploy custom machine learning architectures developed for physics to exascale for the first time,” Shanahan said. “The hope is that this will enable calculations in nuclear physics that are not computationally tractable with traditional approaches, but it will also represent the first at-scale application of machine learning at all in this context.”

While the Standard Model has had a profound impact on science, there are still fundamental mysteries at the root of our understanding of the universe. Unraveling the mystery of dark matter will be a game changer for many areas of research, but Detmold reminded us that fundamental science goes beyond mere application.

“Basic curiosity is driving what we do,” he said. “Understanding how nature works for the sake of understanding it is important.”