Boosting computing power for the future of particle physics

Prototype machine-learning technology co-developed by MIT scientists speeds processing by up to 175 times over traditional methods.

A new machine learning technology tested by an international team of scientists including MIT Assistant Professor Philip Harris and postdoc Dylan Rankin, both of the Laboratory for Nuclear Science, can spot specific particle signatures among an ocean of Large Hadron Collider (LHC) data in the blink of an eye.

Sophisticated and swift, the new system provides a glimpse into the game-changing role machine learning will play in future discoveries in particle physics as data sets get bigger and more complex.

The LHC creates some 40 million collisions every second. With such vast amounts of data to sift through, it takes powerful computers to identify those collisions that may be of interest to scientists, such as a hint of dark matter or a Higgs particle.

Now, scientists at Fermilab, CERN, MIT, the University of Washington, and elsewhere have tested a machine-learning system that speeds processing by 30 to 175 times compared to existing methods.

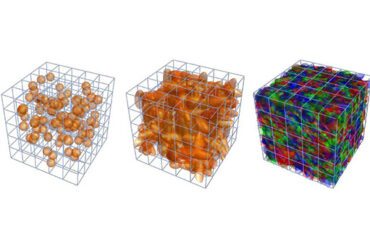

Existing methods currently process less than one image per second. In contrast, the new machine-learning system can review up to 600 images per second. During its training period, the system learned to pick out one specific type of post-collision particle pattern.

“The collision patterns we are identifying, top quarks, are one of the fundamental particles we probe at the Large Hadron Collider,” says Harris, who is a member of the MIT Department of Physics. “It’s very important we analyze as much data as possible. Every piece of data carries interesting information about how particles interact.”

Those data will be pouring in as never before after the current LHC upgrades are complete; by 2026, the 17-mile particle accelerator is expected to produce 20 times as much data as it does currently. To make matters even more pressing, future images will also be taken at higher resolutions than they are now. In all, scientists and engineers estimate the LHC will need more than 10 times the computing power it currently has.

“The challenge of future running,” says Harris, “becomes ever harder as our calculations become more accurate and we probe ever-more-precise effects.”

Researchers on the project trained their new system to identify images of top quarks, the most massive type of elementary particle, some 180 times heavier than a proton. “With the machine-learning architectures available to us, we are able to get high-grade scientific-quality results, comparable to the best top-quark identification algorithms in the world,” Harris explains. “Implementing core algorithms at high speed gives us the flexibility to enhance LHC computing in the critical moments where it is most needed.”